Solution/Process Lifecycle – Architecture is more than “draw it and forget it”

Some organizations and methods, such as Lean or Six Sigma, focus on improving a single process with a well-defined scope, while others take a wider, more holistic approach to processes and process improvement. This affects how things are approached, where time and focus are spent, and the level of detail to which certain steps in the solution/process lifecycle are carried out.

As always, you can debate the differences between these approaches and their benefits. This article introduces the “wider” perspective of the solution/process lifecycle, which applies to process improvement projects, system implementations, or any other project type in which architecture plays a part.

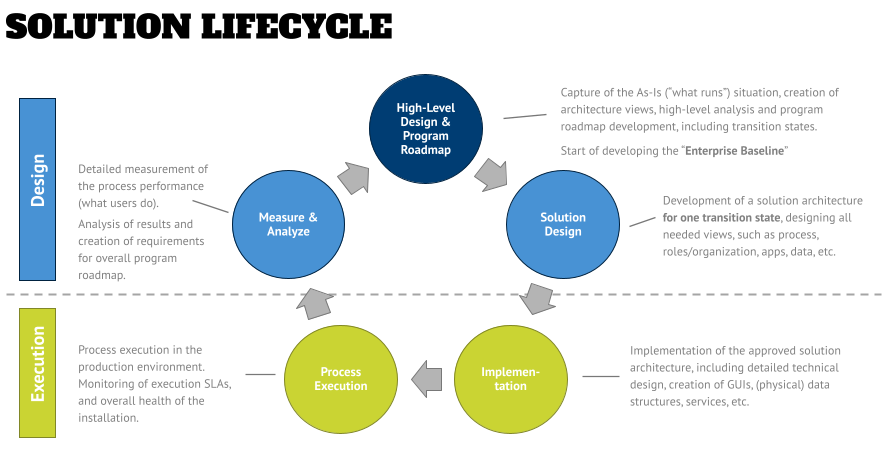

Two areas: Design and Execution

The first thing to recognize when looking at the solution/process lifecycle is that there are different tools for different purposes, which in general fall into two categories:

- Design-time tools: these tools allow you to create blueprints or analyze existing content. The artifacts in this area will also be reused to compare the long-term execution with the initial objectives. Classic architecture tools fall into this category.

- Runtime tools: these are the actual transactional systems and the supporting systems that monitor them. SAP, Salesforce, Dynamics, Workday, and other systems fall into this category. Depending on the capabilities of the actual runtime systems, you might have to complement them with BI tools for monitoring.

The one mistake that I see clients make is to value one side over the other. Of course you cannot run a transaction from a modeling tool. But an implementation is already falling behind if it lacks a proper design that others can critique, one that shows the implementation design and plan at a granularity that leaves no misunderstandings when it comes to configuration, coding, and testing. On the other hand, many architecture-focused teams are too detailed and strict about what must be created for approval, so that the implementation is delayed unnecessarily and people perceive architects as a roadblock instead of a valued contributor.

Phase 1: Strategy and the Enterprise Baseline

In a perfect world, one would start with a clean slate and create a repository of what is currently in production, in all architecture views defined by your chosen framework (such as TOGAF).

In this phase the structure is set up, the data and meta models are defined as part of the technical governance phase of the implementation, and existing content is imported and normalized. The main idea here is to create the “Enterprise Baseline” as the always current version of “what runs”.

The good news is that you don’t have to create everything at once, which would be a Herculean task and would also reinforce the impression of “ivory tower architects”. The way to avoid this scenario is to provide “just enough architecture” guidance (principles and a minimum of reference architecture content, such as preferred technology) and to work with your current projects on defining a minimum architecture structure (in form and content). Then bring their designs into the repository: into the “Projects/Solutions” area or, for approved designs and projects that are close to go-live or have just gone live, into the “Enterprise Baseline” area.

You can also use modern technologies like Process Mining and Task Mining (Robotics Process Discovery) to identify current state content and create strawman models to complete an initial picture of the architecture. You should also think about importing the current strategy artifacts, so that you have a baseline set of objectives, requirements, needs, KPIs, etc. that you can map your (future) projects against.

The main purpose of the Enterprise Baseline is to provide the foundation for identifying change and improvement needs, for example to reduce technical debt, enable process improvements, or restructure the organization. In addition, analysis techniques such as process simulation can be applied to help identify and justify a future project (to support the business case).

These identified projects are then created as placeholders in the “Projects/Solutions” area until they are approved and further developed.

Phase 2: Solution architecture and design

In this phase of the solution/process lifecycle, the solution that was identified in the previous phase as a potential problem solver and defined as a project is designed. The result describes “what changes”.

A “solution” in this context is more than the chosen software; it is the sum of all views (processes, apps, data, org, etc.) needed to implement, update, or retire one or more capabilities. Therefore, it is crucial that a project includes the strategy artifacts needed and does not jump directly into business and technology blueprinting. Having clarity about objectives, KPIs, business model, etc. will help in coordinating and resolving conflicts with other programs and projects that might have a less well-defined scope and program approach.

A solution describes the design for one transition state, and it is recommended to tailor the projects in a program accordingly, by the capability configurations related to each transition state. This allows controlled change management going forward while coordinating your program’s schedule with other related programs, so that continuous operation of the implemented processes and systems can be ensured for end users.

A consequence of this is that you need various roles to develop a solution – the individual domain architects (process, organization/roles, apps, data, and so on) and also a Solution Architect who is responsible for integrating the different designs into a consistent solution design. For larger programs, where multiple projects implement aspects of a capability set, you might even need a higher role – the Solution Portfolio Manager.

Once all dependencies, internal and external, are resolved and the design is approved, it can be moved into the next phase. In addition to this, the solution design phase can also provide input to (the update of) reference architectures, for example, when a new capability and new systems are to be implemented.

Phase 3: Implementation

This phase covers the actual changes to the organization, systems, underlying technology, and processes. It is mostly driven by the technical configuration of the runtime systems, but also includes the “people side” of the solution: the org change management team that puts the organizational changes in place, coordinates with works councils, etc., and enables users and admins in the new technology.

Architecture contributes to this phase in several ways:

- Provide guidance on how things have to be implemented, and update the solution designs accordingly if changes are needed.

- Support the testing effort by creating test cases for higher level tests – remember: every branch in a process is a test case and needs to be successfully executed.

- Synchronize/upload solution designs to Learning Management Systems such as SAP’s Enable Now, so that the content there reflects the new reality with regard to processes, app landscapes, and so on.

- Update the Enterprise Baseline with the final implemented design, so that future analysis and project definitions can be done from an up-to-date information foundation.
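The testing point above (“every branch in a process is a test case”) can be illustrated with a minimal sketch. It assumes a simplified, acyclic process model stored as a dictionary of steps and their possible successors; the process, the step names, and the function `enumerate_paths` are hypothetical and not taken from any specific modeling tool.

```python
from typing import Dict, List

# Hypothetical process model: each step maps to the steps that may follow it.
# A step with more than one successor is a branch (gateway) in the process.
PROCESS: Dict[str, List[str]] = {
    "Receive order": ["Check credit"],
    "Check credit": ["Approve order", "Reject order"],
    "Approve order": ["Ship goods"],
    "Ship goods": ["Send invoice"],
    "Reject order": [],
    "Send invoice": [],
}


def enumerate_paths(model: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Return every path from the start step to an end step.

    Each returned path is one candidate end-to-end test case.
    Only acyclic models are supported in this sketch.
    """
    paths: List[List[str]] = []
    stack: List[List[str]] = [[start]]
    while stack:
        path = stack.pop()
        successors = model.get(path[-1], [])
        if not successors:
            # No successor: the path reached an end step.
            paths.append(path)
            continue
        for nxt in successors:
            if nxt in path:
                raise ValueError(f"Loop detected at step {nxt!r}")
            stack.append(path + [nxt])
    return paths


test_cases = enumerate_paths(PROCESS, "Receive order")
for number, case in enumerate(test_cases, start=1):
    print(f"Test case {number}: " + " -> ".join(case))
```

In this example the single branch after “Check credit” yields two test cases, one for the approval path and one for the rejection path. Real process models with loops or parallel gateways need a more careful treatment than this sketch provides.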

Phase 4: Process Execution

The scope of this phase is to establish ongoing monitoring of the execution of the solution on a day-to-day basis. Setting up dashboards for the different stakeholder groups (operators, process owners, management, etc.) provides real-time information that measures the service level agreements for the defined processes and solutions, so that quick action can be taken if an SLA is not met.
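As a rough illustration of the SLA check behind such a dashboard, the following sketch flags process instances that ran longer than their target. All process names, targets, and the data structure are assumptions for illustration only; in practice the data would come from the runtime systems or a BI layer.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ProcessInstance:
    case_id: str
    process: str
    duration_hours: float


# Hypothetical SLA targets: maximum duration in hours per process.
SLA_HOURS: Dict[str, float] = {"Order to Cash": 48.0, "Procure to Pay": 72.0}

# Hypothetical completed instances, as a dashboard data source might deliver them.
INSTANCES: List[ProcessInstance] = [
    ProcessInstance("C1", "Order to Cash", 36.0),
    ProcessInstance("C2", "Order to Cash", 60.5),
    ProcessInstance("C3", "Procure to Pay", 70.0),
    ProcessInstance("C4", "Procure to Pay", 90.0),
]


def sla_breaches(instances, sla_hours):
    """Return the instances that ran longer than the SLA of their process."""
    return [
        i for i in instances
        if i.process in sla_hours and i.duration_hours > sla_hours[i.process]
    ]


def sla_met_rate(instances, sla_hours):
    """Share of instances (0.0 to 1.0) that met their SLA; 1.0 if none are measured."""
    measured = [i for i in instances if i.process in sla_hours]
    if not measured:
        return 1.0
    return 1 - len(sla_breaches(measured, sla_hours)) / len(measured)


breaches = sla_breaches(INSTANCES, SLA_HOURS)
print("Breached cases:", [b.case_id for b in breaches])
print("SLA met rate:", sla_met_rate(INSTANCES, SLA_HOURS))
```

A dashboard would typically group these numbers by stakeholder view, for example individual breached cases for operators and the aggregated rate for process owners and management.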

Aligning KPIs that are visualized by the dashboards with reference content (e.g. ITIL or SCOR) will help to compare to benchmarks and get users accustomed to process thinking.

Phase 5: Measurement and analysis

This phase is similar to the previous one, and might also use the same data sources in some cases, but the objective here is to “take a step back” and look at trends and usage patterns that show up after some time. You also might want to have a look at what your users actually do (vs. what might have been mapped out in the Solution Design phase).

The main tools for this phase of the solution/process lifecycle are Process Mining and Task Mining (Robotics Process Discovery). Both look at usage and performance KPIs. Process Mining is typically fed by log files from transactional systems that contain case IDs, activity names/events, and start/end timestamps. Task Mining deploys agents on user machines that record what users do in which program (down to the field level) and then creates process models of the procedural steps, one level below the process step that you typically see in a process model (a “business-relevant, enclosed activity”).
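To make the input of Process Mining more tangible, here is a minimal sketch of an event log with case IDs, activity names, and start/end timestamps, together with two basic calculations: the process variants and the throughput time per case. The log content and the function names are made up for illustration; commercial tools do this at scale and with far more sophistication.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical event log rows: (case ID, activity, start, end).
EVENT_LOG = [
    ("C1", "Receive Goods", "2024-01-04 14:00", "2024-01-04 14:30"),
    ("C1", "Create PO", "2024-01-02 09:00", "2024-01-02 09:10"),
    ("C1", "Receive Invoice", "2024-01-05 08:50", "2024-01-05 09:00"),
    ("C2", "Create PO", "2024-01-03 08:00", "2024-01-03 08:15"),
    ("C2", "Receive Goods", "2024-01-05 08:00", "2024-01-05 08:20"),
    ("C2", "Receive Invoice", "2024-01-06 08:00", "2024-01-06 08:10"),
    ("C3", "Receive Invoice", "2024-01-03 11:00", "2024-01-03 11:05"),
    ("C3", "Create PO", "2024-01-03 15:00", "2024-01-03 15:10"),
    ("C3", "Receive Goods", "2024-01-06 09:00", "2024-01-06 09:30"),
]


def parse(timestamp: str) -> datetime:
    return datetime.strptime(timestamp, "%Y-%m-%d %H:%M")


def traces(log):
    """Group events by case ID and order them by start time."""
    by_case = defaultdict(list)
    for case_id, activity, start, end in log:
        by_case[case_id].append((parse(start), parse(end), activity))
    return {case: sorted(events) for case, events in by_case.items()}


def variants(log):
    """Count how often each distinct sequence of activities occurs."""
    return Counter(
        tuple(activity for _, _, activity in events)
        for events in traces(log).values()
    )


def throughput_hours(log):
    """Hours from the first start to the last end of each case."""
    result = {}
    for case, events in traces(log).items():
        first_start = events[0][0]
        last_end = max(end for _, end, _ in events)
        result[case] = (last_end - first_start).total_seconds() / 3600
    return result


for variant, count in variants(EVENT_LOG).most_common():
    print(count, "x", " -> ".join(variant))
print(throughput_hours(EVENT_LOG))
```

In this made-up log, two cases follow the expected sequence and one case receives the invoice before the purchase order is created, which is exactly the kind of variation that the exploration and compliance use cases below look for.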

Typical Process Mining use cases are:

- Process discovery of non-mapped processes (that will be later included in End-to-End and functional process maps in Baseline or Projects/Solutions).

- Process exploration to see the variation of a process, the frequencies of activities, the throughput times of activities and connections (bottlenecks), or any other measure analysis (e.g., cost). This is the “money shot” feature of all process mining tools because it shows the “mess” that happens in reality in a spaghetti diagram. However, this feature has become a commodity and all tools do this to varying degrees of detail.

- Process compliance – is the process executed as it is described in a reference process that is uploaded from the design repository? Good tools not only show you the compliance (are all steps done in the sequence that they are prescribed, in a black-and-white perspective of the world), but also calculate the “fitness score”: to which degree is the variation of the process execution aligned to the reference process? Is it 50% or 95% aligned? How do these scores change over time?

In addition to that, the tools will also show the “failures” in the executed processes, such as multiple start events or incorrect sequences (for example, when an invoice is received before a purchase order was created). Therefore process compliance is also an interesting use case for (internal) auditors.

- Root-Cause Analysis: what are the reasons for the variations or non-compliance? Does the data show the reasons for this (e.g., resources were not provided in time, or a certain type of SKU (stock keeping unit) or vendor creates a performance loss)?

- What/If Analysis – some process mining tools allow you to change the variables in a process to create a scenario and then have the tool run through the data to see if the outcome would be a more desirable one. This can be a valuable input for the next iteration of the lifecycle, where a future state design can be simulated in much more detail.

- Performance visualization – the dashboarding of KPIs that show the performance of processes, the results of them (e.g., production numbers), or the calculation of risk and compliance measures, such as the percentage of process instances in which a three-way-match has been done (the match of purchase order, goods received, and invoice). For obvious reasons, auditors are very keen on this type of information, but other stakeholders – such as process owners, application owners, as well as business line owners – will be interested in the overall performance numbers, or calculated special KPIs that are aligned to their strategic objectives.

Typically, these visualizations are not provided as pre-packaged apps by a process mining vendor, but are built as custom apps in the process mining tool (a later article will talk about the roles and activities needed to implement and operate a process mining tool).

- Actions and notifications. Based on the analysis, workflows and notifications can be triggered that make users aware of patterns and findings, so that optimal process execution can be accomplished. This could also include the triggering of manual reviews or control steps if the process variation is outside of defined patterns.

- Transformation dashboards can also be created to show the improvement over time and whether the defined projects have accomplished their objectives. This brings a completely new perspective to these types of projects, because in the past these have been measured on “secondary” KPIs, such as the number of trained people, while the actual business benefits realization happened months after the project had ended and the project members were reassigned to the next project.
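Two of the measures from the use cases above, process compliance with a degree of alignment and the three-way-match percentage, can be sketched as follows. Note the assumptions: the similarity ratio from Python's `difflib.SequenceMatcher` is only a simple stand-in for the fitness calculations of real process mining tools, which use more elaborate conformance checking, and the three-way-match check here only tests that all three documents occur in a case, not that quantities and amounts agree. The reference process and traces are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical reference process, as it might be uploaded from the design repository.
REFERENCE = ["Create PO", "Receive Goods", "Receive Invoice", "Pay Invoice"]

# Hypothetical executed traces per case (activities in order of execution).
TRACES = {
    "C1": ["Create PO", "Receive Goods", "Receive Invoice", "Pay Invoice"],
    "C2": ["Receive Invoice", "Create PO", "Receive Goods", "Pay Invoice"],
    "C3": ["Create PO", "Receive Invoice", "Pay Invoice"],
}


def is_compliant(trace, reference):
    """Black-and-white view: all steps present and in the prescribed order."""
    return trace == reference


def similarity(trace, reference):
    """Degree of alignment between 0.0 and 1.0 (stand-in for a fitness score)."""
    return SequenceMatcher(None, trace, reference).ratio()


def three_way_match_rate(traces):
    """Share of cases that contain a PO, a goods receipt, and an invoice."""
    if not traces:
        return 0.0
    required = {"Create PO", "Receive Goods", "Receive Invoice"}
    matched = sum(1 for trace in traces.values() if required <= set(trace))
    return matched / len(traces)


for case_id, trace in TRACES.items():
    print(
        case_id,
        "compliant" if is_compliant(trace, REFERENCE) else "non-compliant",
        f"alignment {similarity(trace, REFERENCE):.0%}",
    )
print(f"Three-way match rate: {three_way_match_rate(TRACES):.0%}")
```

Tracking these values per month or per release would give the trend view that the transformation dashboards describe.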

A good Process Mining tool also allows the export of discovered processes into the design platform (for the next improvement cycle), while a Robotics Process Discovery tool provides screenshots that can be embedded into step-by-step instructions of the process models and/or be used for training purposes.

In any case the results of this phase are a trigger for the next iteration of the lifecycle.

How do architecture tools support the solution/process lifecycle?

A good professional architecture tool stack provides features that support all solution/process lifecycle phases:

- Enterprise Baseline: a central repository that allows the structuring of content as defined in the meta model; instant impact analysis by using the object-orientation in the underlying database; analysis reports for repeatable use; ad-hoc queries for quick analysis; simulation; automation of [governance] processes; dashboards for insights into governance (are we 5% or 85% done?).

- Solution Design: modeling capabilities that allow the creation of blueprints in all needed architecture views; comparison features (to show the difference to the baseline current state); if available reference content to create consistent designs; analysis features to show the improvements/differences compared to current state; versioning; if available implementation tool-specific attributes and workflows for data exchange.

- Implementation: synchronization of blueprints with implementation tools (ideally bidirectionally to manage scope); creation of test cases and tracking of those; analysis/reporting for org change management (such as role reports as input for the creation of a training curriculum or communication items); provision of auto-generated views, such as process narratives or step-by-step views; export of content for presentations and other org change management material.

- Process Execution: dashboards; “live” connectivity to source systems; actions and notifications.

- Measure and Analyze: various analysis types for process and task mining (see above); what/if scenarios; actions and notifications; bidirectional connection to the design tool.
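The impact analysis mentioned for the Enterprise Baseline boils down to following the relationships between repository objects. The sketch below assumes a tiny, hypothetical repository in which each object lists the objects that depend on it; the object names and the function `impacted_by` are illustrative and do not reflect the data model of any particular architecture tool.

```python
from collections import deque

# Hypothetical repository relations: object -> objects that depend on it.
DEPENDENTS = {
    "Server: SRV-01": ["App: ERP"],
    "App: ERP": ["Process: Order to Cash", "Process: Procure to Pay"],
    "App: CRM": ["Process: Order to Cash"],
    "Process: Order to Cash": ["Capability: Sales"],
    "Process: Procure to Pay": ["Capability: Procurement"],
}


def impacted_by(obj, dependents):
    """Return all objects that are directly or indirectly affected by a change to obj."""
    seen = set()
    queue = deque([obj])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    seen.discard(obj)  # the changed object itself is not part of its impact
    return seen


for item in sorted(impacted_by("Server: SRV-01", DEPENDENTS)):
    print(item)
```

In this example, retiring the server affects one application, two processes, and two capabilities, which is the kind of answer an architect needs when scoping a change or a new project.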

The graphic below shows some of these technical capabilities mapped to the solution lifecycle.

Solution/process lifecycle conclusion

I think this article made it clear that architectural work is not just “draw and forget” or limited to the design phase of a project. Managed well, it is a competitive differentiator: it provides a longer-term vision and visualization of a strategy, and the information needed for solution design, implementation, and operation.

By setting up the lifecycle in the phases above, a reinforcing effect will kick in that will accelerate the maturity of design and delivery significantly.

Roland Woldt is a well-rounded executive with 25+ years of Business Transformation consulting and software development/system implementation experience, in addition to leadership positions within the German Armed Forces (11 years).

He has worked as Team Lead, Engagement/Program Manager, and Enterprise/Solution Architect for many projects. Within these projects, he was responsible for the full project life cycle, from shaping a solution and selling it, to setting up a methodological approach through design, implementation, and testing, up to the rollout of solutions.

In addition to this, Roland has managed consulting offerings throughout their lifecycle, from definition through delivery to update, and had revenue responsibility for them.

Roland has had many roles: VP of Global Consulting at iGrafx, Head of Software AG’s Global Process Mining CoE, Director in KPMG’s Advisory (running the EA offering for the US firm), and other leadership positions at Software AG/IDS Scheer and Accenture. Before that, he served as an active-duty and reserve officer in the German Armed Forces.